This post is the third in a series produced for a Harvard Kennedy School field class on innovation in government. Our team is working with the Census Bureau. You can read about our project here and about our experience interviewing and engaging Census users here.

Previously, we talked about the importance of interviewing and observing people using Census data. This week, we have been working to find the meaning in our research. What did we find, and why does it matter? And what are the similarities and differences among the experiences of the 18 different people we interviewed?

One of our most interesting findings is that some Census users need assurance they are using data properly.

Here’s how we got there:

Step 1. We summarized our interviews into “one-pagers.” After each user interview, we documented our findings. The summary of our conversation with Denise-Marie Ordway, a reporter who worked with the Orlando Sentinel and The Philadelphia Inquirer, is below. Denise told us that she is unlikely to use data if she can’t check her interpretation of it with an expert.

Interview summary for Denise-Marie Ordway, Journalist.

Step 2. We identified attitudes and behaviors by user group. After reading the interview notes for each conversation, we wrote down interesting attitudes and behaviors on post-it notes. To reduce bias, we each read interview summaries for users we had not personally interviewed.

Consistent with our initial methodology of splitting users into professional groups, we placed the post-it notes under three green headings – journalists, NGOs and academics (pictured below). We wrote Denise’s comment that she is unlikely to use data if she can’t check her interpretation on a pink post-it note and placed it under the heading “Journalist.”

Nidhi and Aaron arrange insights under user groups for an initial assessment of attitudes and behavior.

Step 3. We grouped insights by theme. We removed the green user group post-its and began to rearrange the pink post-it notes by theme (pictured below). We immediately saw common themes, shared behaviors, and similar pain points across groups. We recorded these themes on blue post-it notes.

Peter rearranges insights by theme to help us visualize key insights across user groups.

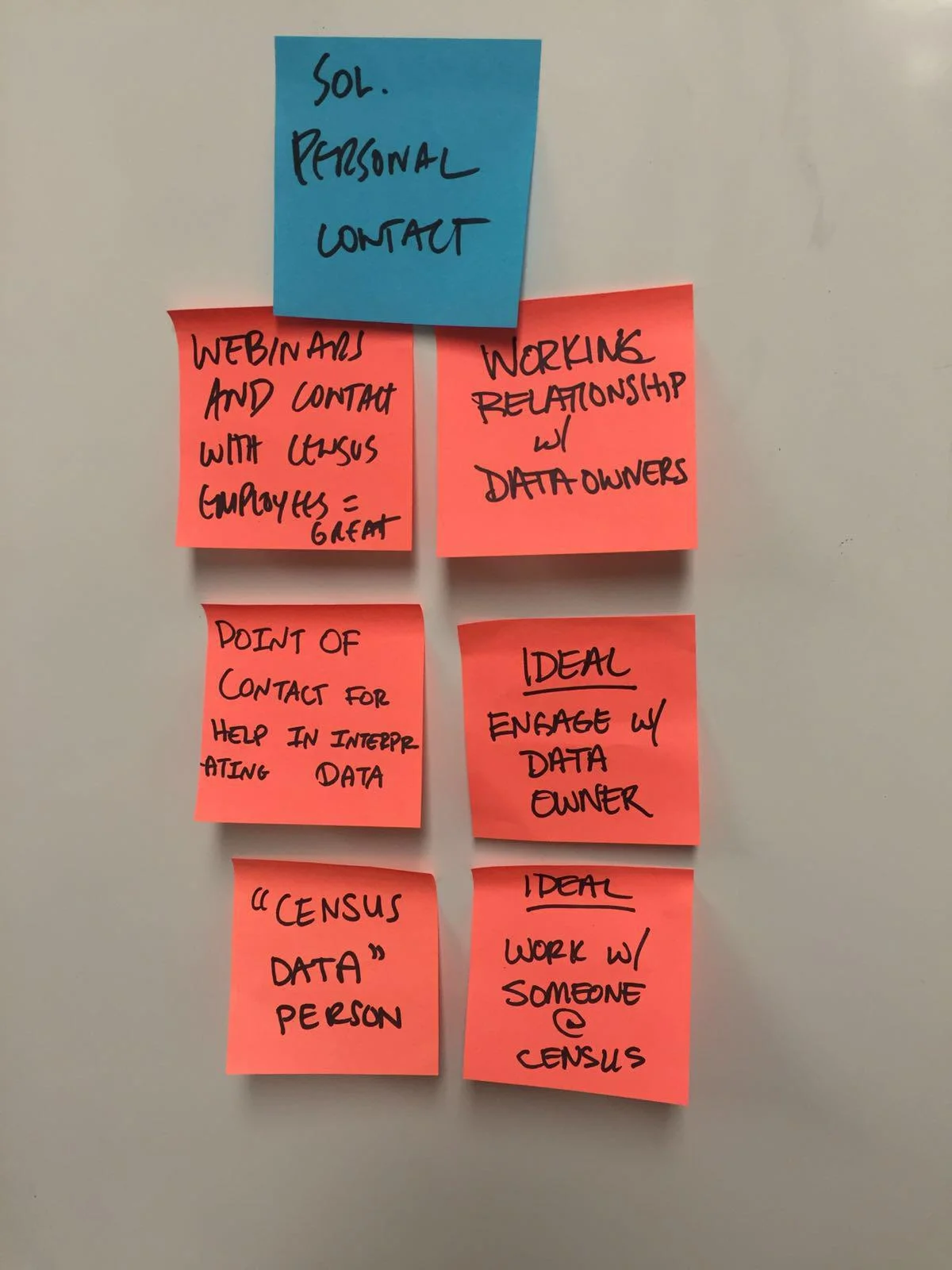

Step 4. We generated key insights. We now had eighteen themes on the board. Denise’s comment was now located under the heading “Solution – Personal Contact.” There were five other post-its with similar concepts, including: “Ideal would be to engage with data owner at the Census,” “Ideal would be to work with someone at the Census,” and “Would like a point of contact for help in interpreting data” (see below).

Post-it notes across user groups related to “Solution – Personal Contact”

Denise’s comment was not an outlier! We knew we had discovered something important. Thinking back to our original conversation with Denise, we realized that part of her desire for personal contact was related to her lack of confidence in interpreting the data. We had arrived at a key insight: some Census users need assurance they are using data properly.

Next up: We are finishing our research phase and using our insights to develop user personas. We plan to visit the Census Bureau in Washington, D.C., brainstorm potential solutions, and test them with users. We’re looking forward to collaborating with Ben Willman, Director of Strategy at the Presidential Innovation Fellows program, on our prototyping approach.

Luciano Arango, Nidhi Badaya, Aaron Capizzi, Rebecca Scharfstein, Peter Willis